The wiki replaces the old AI Impacts pages. The old pages are still up, but for up-to-date content, see the wiki versions.

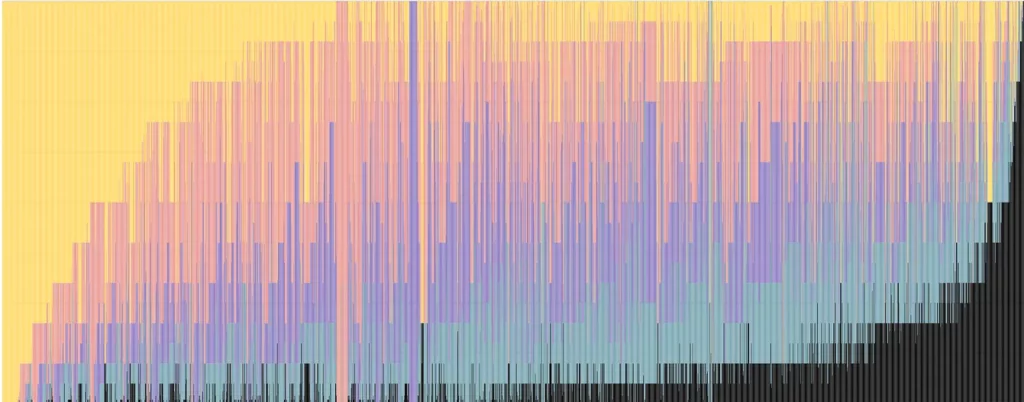

- by Ben Weinstein-Raun. With new charts and a newly open-source codebase

- by Owen Cotton-Barratt. We’re delighted to announce the winners of the Essay competition on the Automation of Wisdom and Philosophy.

- by Katja Grace. Recently, Nathan Young and I wrote about arguments for AI risk and put them on the AI Impacts wiki. In the process, we ran a casual little survey of […]