Blog

Blog

Blog

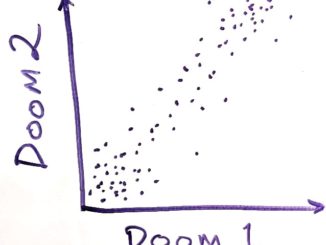

Against a General Factor of Doom

If you ask people a bunch of specific doomy questions and their answers are suspiciously correlated, they may be expressing their overall p(Doom) on every question rather than answering each one on its own merits. A single general factor of doom is unlikely to describe reality accurately: the future is likely to be surprisingly doomy in some ways and surprisingly tractable in others.

AI safety work

Arguments for AI risk