blog

You Can’t Predict a Game of Pinball

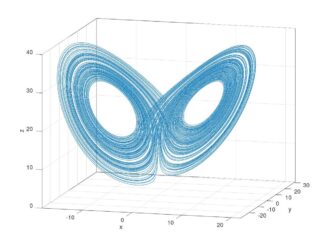

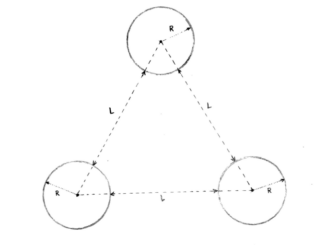

The uncertainty in the pinball's position grows by a factor of about 5 every time the ball collides with one of the disks. Because that growth compounds, 12 bounces multiply it by roughly 5^12 ≈ 2.4 × 10^8, so an initial uncertainty the size of an atom grows to be as large as the disks themselves. Since you cannot measure a pinball's position more precisely than the size of an atom, it is impossible, even in principle, to predict its motion between the disks for more than about 12 bounces.
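The compounding is easy to check numerically. The sketch below assumes an atom is about 10^-10 m across and uses a hypothetical disk size of 2 cm. Neither number comes from the figures above; both are stand-ins chosen for illustration. It treats the growth as a fixed factor of 5 per bounce and asks how many bounces it takes for an atom-sized uncertainty to reach the disk size.

```python
import math

# Assumed illustrative values (not taken from the post's figures):
# an atom is roughly 1e-10 m across, and each collision multiplies
# the position uncertainty by about 5.
ATOM_SIZE_M = 1e-10
GROWTH_PER_BOUNCE = 5.0


def uncertainty_after(bounces: int, initial: float = ATOM_SIZE_M,
                      factor: float = GROWTH_PER_BOUNCE) -> float:
    """Position uncertainty (in metres) after a number of bounces,
    assuming it grows by the same factor at every collision."""
    return initial * factor ** bounces


def bounces_until(target: float, initial: float = ATOM_SIZE_M,
                  factor: float = GROWTH_PER_BOUNCE) -> int:
    """Smallest number of bounces after which the uncertainty
    reaches or exceeds `target` metres."""
    return math.ceil(math.log(target / initial) / math.log(factor))


if __name__ == "__main__":
    for n in (0, 4, 8, 12):
        print(f"after {n:2d} bounces: {uncertainty_after(n):.2e} m")
    # Hypothetical disk size of 2 cm, used only for illustration.
    print("bounces to reach 2 cm:", bounces_until(0.02))
```

With these assumptions the uncertainty passes 2 cm at the 12th bounce. Because the growth is exponential, the answer barely moves if the disk size or atom size is off by a factor of a few: each extra factor of 5 in the ratio costs only one more bounce.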
