Rick Korzekwa, 11 April 2023, updated 13 April 2023

At AI Impacts, we’ve been looking into how people, institutions, and society approach novel, powerful technologies. One part of this is our technological temptations project, in which we are looking into cases where some actors had a strong incentive to develop or deploy a technology, but chose not to or showed hesitation or caution in their approach. Our researcher Jeffrey Heninger has recently finished some case studies on this topic, covering geoengineering, nuclear power, and human challenge trials.

This document summarizes the lessons I think we can take from these case studies. Much of it is borrowed directly from Jeffrey’s written analysis or conversations I had with him, some of it is my independent take, and some of it is a mix of the two, which Jeffrey may or may not agree with. All of it relies heavily on his research.

The writing is somewhat more confident than my beliefs. Some of this is very speculative, though I tried to flag the most speculative parts as such.

Summary

Jeffrey Heninger investigated three cases of technologies that create substantial value but were either not pursued or pursued more slowly than they could have been.

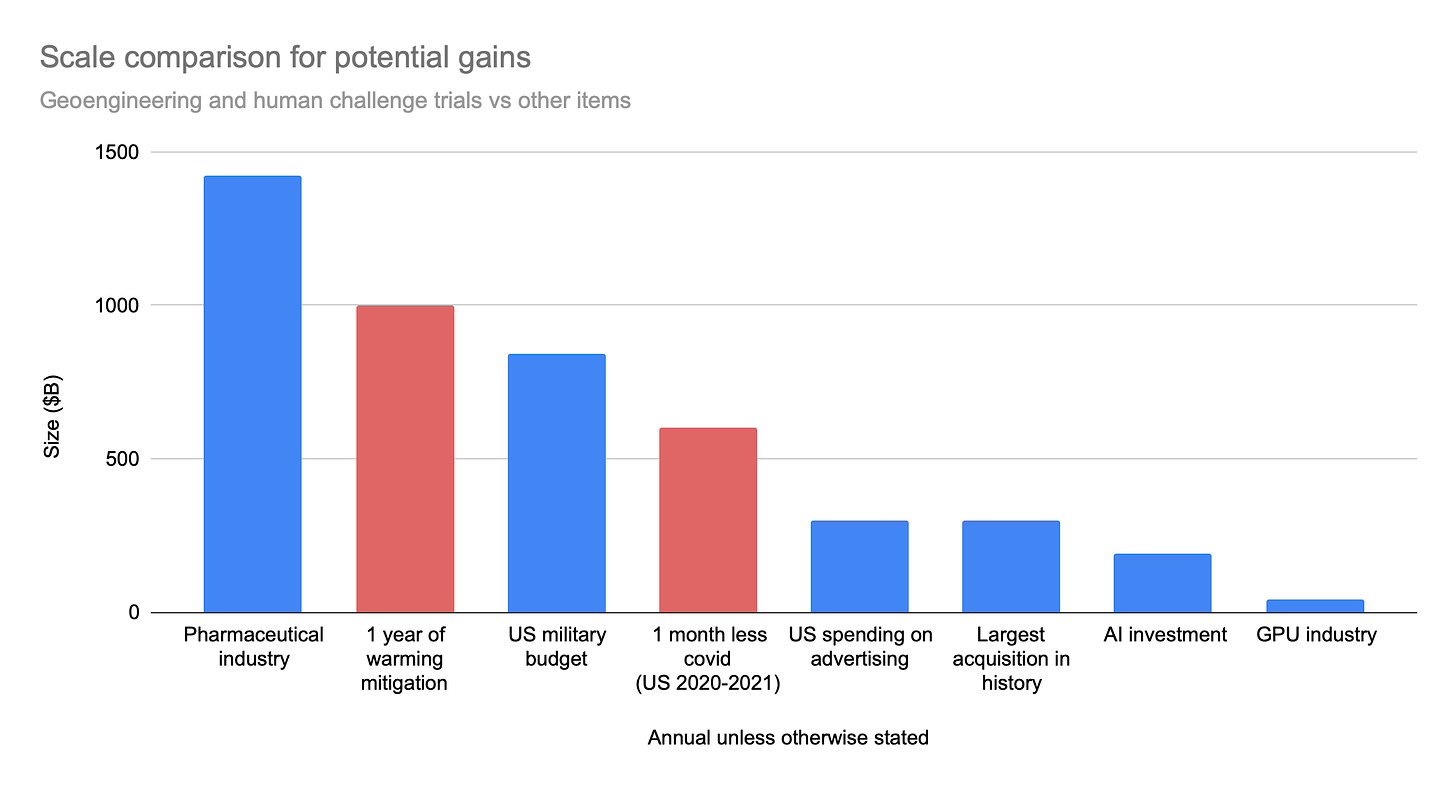

The overall scale of value at stake was very large for these cases, on the order of hundreds of billions to trillions of dollars. But it’s not clear who could capture that value, so it’s not clear whether the temptation was closer to $10B or $1T.

Social norms can generate strong disincentives for pursuing a technology, especially when combined with enforceable regulation.

Scientific communities and individuals within those communities seem to have particularly high leverage in steering technological development at early stages.

Inhibiting deployment can inhibit development for a technology over the long term, at least by slowing cost reductions.

Some of these lessons are transferable to AI, at least enough to be worth keeping in mind.

Overview of cases

- Geoengineering could feasibly provide benefits of $1-10 trillion per year through global warming mitigation, at a cost of $1-10 billion per year, but actors who stand to gain the most have not pursued it, citing a lack of research into its feasibility and safety. Research has been effectively prevented by climate scientists and social activist groups.

- Nuclear power has proliferated globally since the 1950s, but many countries have prevented or inhibited the construction of nuclear power plants, sometimes at an annual cost of tens of billions of dollars and thousands of lives. This is primarily done through legislation, like Italy’s ban on all nuclear power, or through costly regulations, like safety oversight in the US that has increased the cost of plant construction there by a factor of ten.

- Human challenge trials could have accelerated deployment of covid vaccines by more than a month, saving many thousands of lives and billions or trillions of dollars. Despite this, the first challenge trial for a covid vaccine was not performed until after several vaccines had been tested and approved using traditional methods. This is consistent with the historical rarity of challenge trials, which seems to be driven by ethical concerns and enforced by institutional review boards.
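As a rough check on the geoengineering figures above, the quoted ranges ($1-10 trillion per year in benefits against $1-10 billion per year in costs) imply a benefit-to-cost ratio somewhere between 100 and 10,000. The sketch below only does that interval arithmetic. The function name is illustrative, and the bounds are the order-of-magnitude ranges from the text, not precise estimates.

```python
def ratio_range(benefit_low, benefit_high, cost_low, cost_high):
    """Return (min, max) of benefit/cost given a range for each."""
    # The smallest ratio pairs the lowest benefit with the highest cost,
    # and the largest ratio pairs the highest benefit with the lowest cost.
    return benefit_low / cost_high, benefit_high / cost_low


TRILLION = 1e12
BILLION = 1e9

# Ranges taken from the geoengineering bullet: $1-10T benefit, $1-10B cost per year.
low, high = ratio_range(1 * TRILLION, 10 * TRILLION, 1 * BILLION, 10 * BILLION)
print(f"benefit/cost between {low:,.0f}x and {high:,.0f}x")
```

Even at the pessimistic end of both ranges, the implied benefits exceed the costs by about two orders of magnitude, which is what makes the lack of pursuit notable.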

Scale

The first thing to notice about these cases is the scale of value at stake. Mitigating climate change could be worth hundreds of billions or trillions of dollars per year, and deploying covid vaccines a month sooner could have saved many thousands of lives. While these numbers do not represent a major fraction of the global economy or the overall burden of disease, they are large compared to many relevant scales for AI risk. The world’s most valuable companies have market caps of a few trillion dollars, and the entire world spends around two trillion dollars per year on defense. In comparison, annual funding for AI is on the order of $100B.[1]

Setting aside for the moment who could capture the value from a technology and whether the reasons for delaying or forgoing its development are rational or justified, I think it is worth recognizing that the potential upsides are large enough to create strong incentives.

Social norms

My read on these cases is that a strong determinant for whether a technology will be pursued is social attitudes toward the technology and its regulation. I’m not sure what would have happened if Pfizer had, in defiance of FDA standards and medical ethics norms, infected volunteers with covid as part of their vaccine testing, but I imagine the consequences would have been more severe than fines or difficulty obtaining FDA approval. They would have lost standing in the medical community and possibly been unable to continue existing as a company. This goes similarly for other technologies and actors. Building nuclear power plants without adhering to safety standards is so far outside the range of acceptable actions that even suggesting it as a strategy for running a business or addressing climate change is a serious risk to reputation for a CEO or public official. An oil company executive who finances a project to disperse aerosols into the upper atmosphere to reduce global warming and protect his business sounds like a Bond movie villain.

This is not to suggest that social norms are infinitely strong or that they are always well-aligned with society’s interests. Governments and corporations will do things that are widely viewed as unethical if they think they can get away with it, for example, by doing it in secret.[2] And I think that public support for our current nuclear safety regime is gravely mistaken. But strong social norms, either against a technology or against breaking regulations, do seem able, at least in some cases, to create incentives strong enough to constrain valuable technologies.

The public

The public plays a major role in defining and enforcing the range of acceptable paths for technology. Public backlash in response to early challenge trials set the stage for our current ethics standards, and nuclear power faces crippling safety regulations in large part because of public outcry in response to a perceived lack of acceptable safety standards. In both of these cases, the result was not just the creation of regulations, but strong buy-in and a souring of public opinion on a broad category of technologies.[3]

Although public opposition can be a powerful force in expelling things from the Overton window, it does not seem easy to predict or steer. The Chernobyl disaster made a strong case for designing reactors in a responsible way, but it was instead viewed by much of the public as a demonstration that nuclear power should be abolished entirely. I do not have a strong take on how hard this problem is in general, but I do think it is important and should be investigated further.

The scientific community

The precise boundaries of acceptable technology are defined in part by the scientific community, especially when technologies are very early in development. Policy makers and the public tend to defer to what they understand to be the official, legible scientific view when deciding what is or is not okay. This does not always match the actual views of scientists.

Geoengineering as an approach to reducing global warming has not been recommended by the IPCC, and a minority of climate scientists support research into geoengineering. Presumably the advocacy groups opposing geoengineering experiments would have faced a tougher battle if the official stance from the climate science community were in favor of geoengineering.

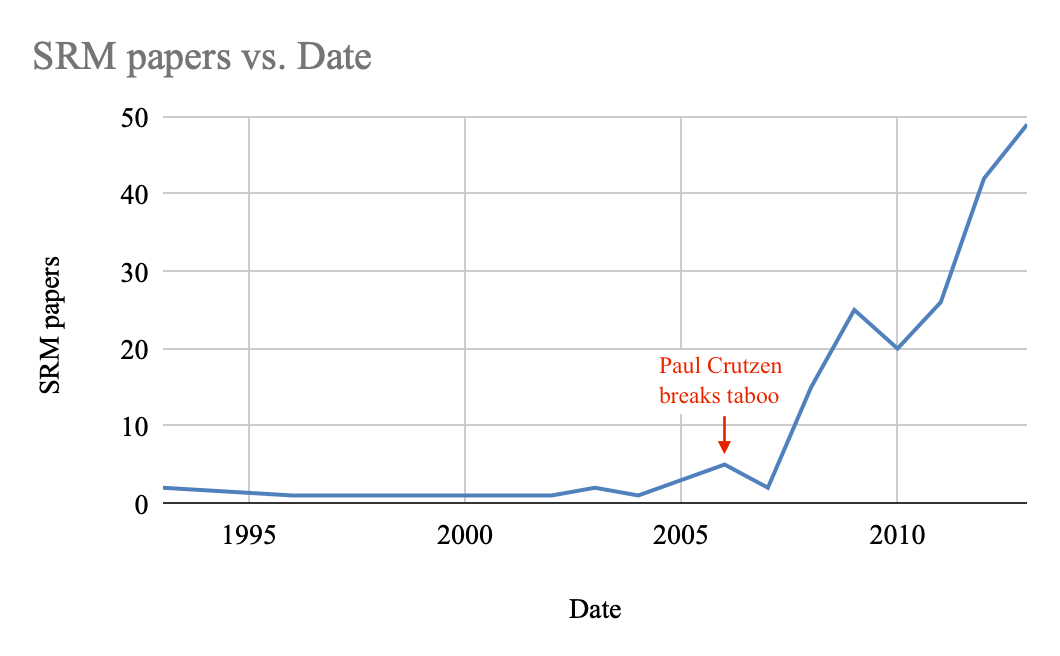

One interesting aspect of this is that scientific communities are small and heavily influenced by individual prestigious scientists. The taboo on geoengineering research was broken by the editor of a major climate journal, and the number of papers on the topic then increased by more than a factor of 20 within two years.[4]

I suspect the public and policymakers are not always able to tell the difference between the official stance of regulatory bodies and the consensus of scientific communities. My impression is that scientific consensus is not in favor of radiation health models used by the Nuclear Regulatory Commission, but many people nonetheless believe that such models are sound science.

Warning shots

Past incidents like the Fukushima disaster and the Tuskegee syphilis study are frequently cited by opponents of nuclear power and human challenge trials. I think this may be significant, because it suggests that these “warning shots” have done a lot to shape perception of these technologies, even decades later. One interpretation of this is that, regardless of why someone is opposed to something, they benefit from citing memorable events when making their case. Another, non-competing interpretation is that these events are causally important in the trajectory of these technologies’ development and the public’s perception of them.

I’m not sure how to untangle the relative contribution of these effects, but either way, it suggests that such incidents are important for shaping and preserving norms around the deployment of technology.

Locality

In general, social norms are local. Building nuclear power plants is much more acceptable in France than it is in Italy. Even if two countries allow the construction of nuclear power plants and have similarly strong norms against breaking nuclear safety regulations, those safety regulations may be different enough to create a large difference in plant construction between countries, as seen with the US and France.

Because scientific communities have members and influence across international borders, they may have more sway over what happens globally (as we’ve seen with geoengineering), but this may be limited by local differences in the acceptability of going against scientific consensus.

Development trajectories

A common feature of these cases is that preventing or limiting deployment of the technology inhibited its development. Because less developed technologies are less useful and harder to trust, this seems to have helped reduce deployment.

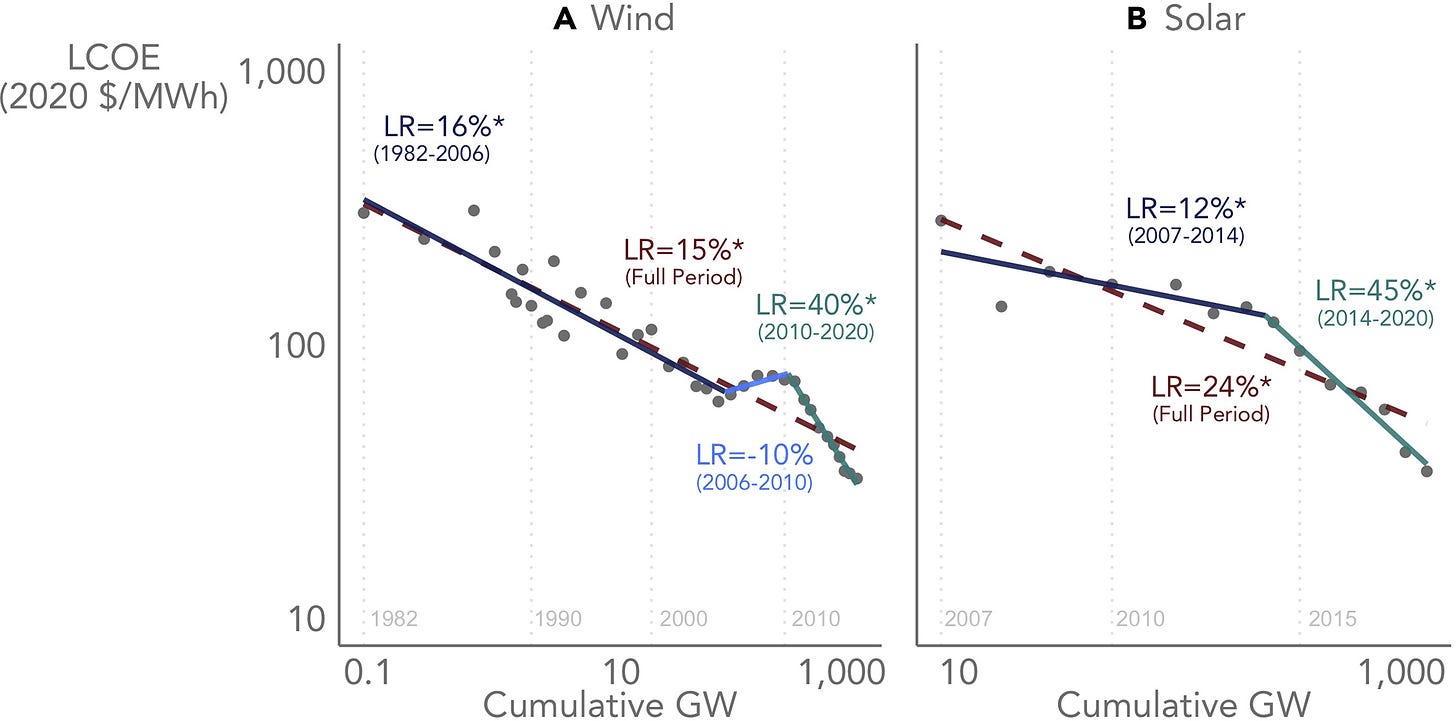

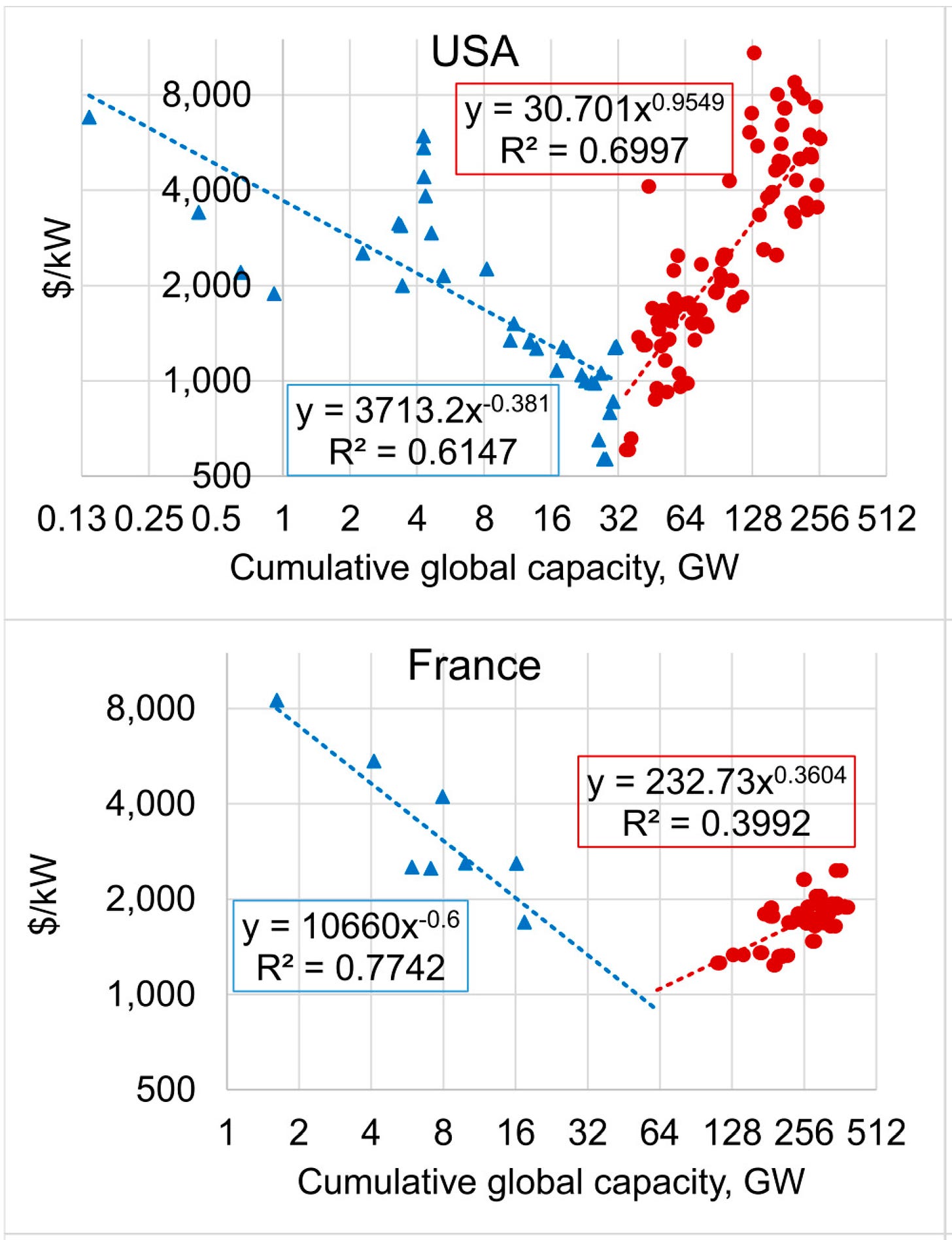

Normally, things become cheaper to make, in a somewhat predictable way, as we make more of them. The cost goes down with the total amount that has been produced, following a power law. This is what has been happening with solar and wind power.[5]

Initially, building nuclear power plants seems to have become cheaper in the usual way for new technology: doubling the total capacity of nuclear power plants reduced the cost per kilowatt by a constant fraction. Starting around 1970, regulations and public opposition to building plants did more than increase construction costs in the near term. By reducing the number of plants built and inhibiting small-scale design experiments, they slowed the development of the technology, and correspondingly reduced the rate at which we learned to build plants cheaply and safely.[6] Absent reductions in cost, nuclear plants continue to be uncompetitive with other power generating technologies in many contexts.
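The learning-curve relationship described here is often written as a power law (sometimes called Wright's law): cost = c0 * (Q / Q0) ** (-b), where Q is cumulative production, so each doubling of Q multiplies cost by 2 ** (-b). Below is a minimal sketch of that relationship. The 20% learning rate and the starting cost are made-up illustrative values, not figures from the studies cited in the notes, and the function names are hypothetical.

```python
import math


def learning_exponent(learning_rate):
    """Exponent b such that each doubling of output cuts cost by `learning_rate`."""
    return -math.log2(1.0 - learning_rate)


def unit_cost(cumulative, initial_cost, initial_cumulative, learning_rate):
    """Power-law unit cost after `cumulative` total units have been produced."""
    b = learning_exponent(learning_rate)
    return initial_cost * (cumulative / initial_cumulative) ** (-b)


# Hypothetical inputs: cost of 100 (arbitrary units) at 1 unit built,
# with a 20% cost reduction per doubling of cumulative production.
for doublings in range(4):
    q = 2 ** doublings
    print(q, round(unit_cost(q, 100.0, 1.0, 0.20), 1))
```

Under this model, cost depends only on cumulative production, so building fewer plants means fewer doublings and slower cost reductions. That is one way to read the claim that limiting deployment inhibits development.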

Because solar radiation management acts on a scale of months-to-years and the costs of global warming are not yet very high, I am not surprised that we have still not deployed it. This does not explain the lack of research, however. One of the reasons given for opposition to experiments is that it has not been shown to be safe, yet the reason we lack evidence on safety is that research has been opposed, even at small scales.

It is less clear to me how much the relative lack of human challenge trials in the past[7] has made us less able to do them well now. I’m also not sure how much a stronger past record of challenge trials would cause them to be viewed more positively. Still, absent evidence that medical research methodology does not improve in the usual way with quantity of research, I expect we are at least somewhat less effective at performing human challenge trials than we otherwise would be.

Separating safety decisions from gains of deployment

I think it’s impressive that regulatory bodies are able to prevent use of technology even when the cost of doing so is on the scale of many billions, plausibly trillions of dollars. One of the reasons this works seems to be that regulators will be blamed if they approve something and it goes poorly, but they will not receive much credit if things go well. Similarly, they will not be held accountable for failing to approve something good. This creates strong incentives for avoiding negative outcomes while creating little incentive to seek positive outcomes. I’m not sure if this asymmetry was deliberately built into the system or if it is a side effect of other incentive structures (e.g., at the level of politics, there is more benefit from placing blame than there is from giving credit), but it is a force to be reckoned with, especially in contexts where there is a strong social norm against disregarding the judgment of regulators.

Who stands to gain

It is hard to assess which actors are actually tempted by a technology. While society at large could benefit from building more nuclear power plants, much of the benefit would be dispersed as public health gains, and it is difficult for any particular actor to capture that value. Similarly, while many deaths could have been prevented if the covid vaccines had been available two months earlier, it is not clear if this value could have been captured by Pfizer or Moderna–demand for vaccines was not changing that quickly.

On the other hand, not all the benefits are external–switching from coal to nuclear power in the US could save tens of billions of dollars a year, and drug companies pay billions of dollars per year for trials. Some government institutions and officials have the stated goal of creating benefits like public health, in addition to economic and reputational stakes in outcomes like the quick deployment of vaccines during a pandemic. These institutions pay costs and make decisions on the basis of economic and health gains from technology (for example, subsidizing photovoltaics and obesity research), suggesting they have incentive to create that value.

Overall, I think this lack of clarity around incentives and capture of value is the biggest reason for doubt that these cases demonstrate strong resistance to technological temptation.

What this means for AI

How well these cases generalize to AI will depend on facts about AI that are not yet known. For example, if powerful AI requires large facilities and easily-trackable equipment, I think we can expect lessons from nuclear power to be more transferable than if it can be done at a smaller scale with commonly-available materials. Still, I think some of what we’ve seen in these cases will transfer to AI, either because of similarity with AI or because they reflect more general principles.

Social norms

The main thing I expect to generalize is the power of social norms to constrain technological development. While it is far from guaranteed to prevent irresponsible AI development, especially if building dangerous AI is not seen as a major transgression everywhere that AI is being developed, it does seem like the world is much safer if building AI in defiance of regulations is seen as similarly villainous to building nuclear reactors or infecting study participants without authorization. We are not at that point, but the public does seem prepared to support concrete limits on AI development.

I do think there are reasons for pessimism about norms constraining AI. For geoengineering, the norms worked by tabooing a particular topic in a research community, but I’m not sure if this will work with a technology that is no longer in such an early stage. AI already has a large body of research and many people who have already invested their careers in it. For medical and nuclear technology, the norms are powerful because they enforce adherence to regulations, and those regulations define the constraints. But it can be hard to build regulations that create the right boundaries around technology, especially something as imprecisely defined as AI. If someone starts building a nuclear power plant in the US, it will become clear relatively early on that this is what they are doing, but a datacenter training an AI and a datacenter updating a search engine may be difficult to tell apart.

Another reason for pessimism is tolerance for failure. Past technologies have mostly carried risks that scaled with how much of the technology was built. For example, if you’re worried about nuclear waste, you probably think two power plants are about twice as bad as one. While risk from AI may turn out this way, it may be that a single powerful system poses a global risk. If this does turn out to be the case, then even if strong norms combine with strong regulation to achieve the same level of success as for nuclear power, it still will not be adequate.

Development gains from deployment

I’m very uncertain how much development of dangerous AI will be hindered by constraints on deployment. I think approximately all technologies face some limitations like this, in some cases very severe limitations, as we’ve seen with nuclear power. But we’re mainly interested in the gains to development toward dangerous systems, which may be possible to advance with little deployment. Adding to the uncertainty, there is ambiguity about where the line is drawn between testing and deployment, and about whether allowing the deployment of verifiably safe systems will provide the gains needed to create dangerous systems.

Separating safety decisions from gains

I do not see any particular reason to think that asymmetric justice (regulators being blamed for bad outcomes but not credited for good ones) will operate differently with AI, but I am uncertain whether regulatory systems around AI, if created, will have such incentives. I think it is worth thinking about IRB-like models for AI safety.

Capture of value

It is obvious there are actors who believe they can capture substantial value from AI (for example, Microsoft recently invested $10B in OpenAI), but I’m not sure how this will go as AI advances. By default, I expect the value created by AI to be more straightforwardly capturable than for nuclear power or geoengineering, but I’m not sure how it differs from drug development.

Social preview image: German anti-nuclear power protesters in 2012. Used under Creative Commons license from Bündnis 90/Die Grünen Baden-Württemberg Flickr

Notes

1. See our page on funding for AI companies and the 2023 AI Index report.

2. Biological weapons research by the USSR is the best example of this that comes to mind.

3. More speculatively, this may be important for geoengineering. Small advocacy groups were able to stop experiments with solar radiation management for reasons that are still not completely clear to me, but I think part of it is public suspicion toward attempts to manipulate the environment.

4. Oldham, Paul, Bronislaw Szerszynski, Jack Stilgoe, Calum Brown, Bella Eacott, and Andy Yuille. “Mapping the landscape of climate engineering.” Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 372, no. 2031 (2014): 20140065.

5. Bolinger, Mark, Ryan Wiser, and Eric O’Shaughnessy. “Levelized cost-based learning analysis of utility-scale wind and solar in the United States.” iScience 25, no. 6 (2022): 104378.

6. Lang, Peter A. “Nuclear Power Learning and Deployment Rates; Disruption and Global Benefits Forgone.” Energies 10, no. 12 (2017): 2169. https://doi.org/10.3390/en10122169

7. There were at least 60 challenge trials globally between 1970 and 2018, spread across 25 pathogens. According to the WHO, there have been 6,000 intervention-based clinical trials just for covid (though keep in mind the fraction of these that would benefit from deliberately infecting patients may be fairly small).