By Katja Grace, 5 June 2015

For the last little while, we’ve been looking into a dataset of individual AI predictions, collected by MIRI a couple of years ago. We also previously gathered all the surveys about AI predictions that we could find. Together, these are all the public predictions of AI that we know of. So we just wrote up a quick summary of what we have so far.

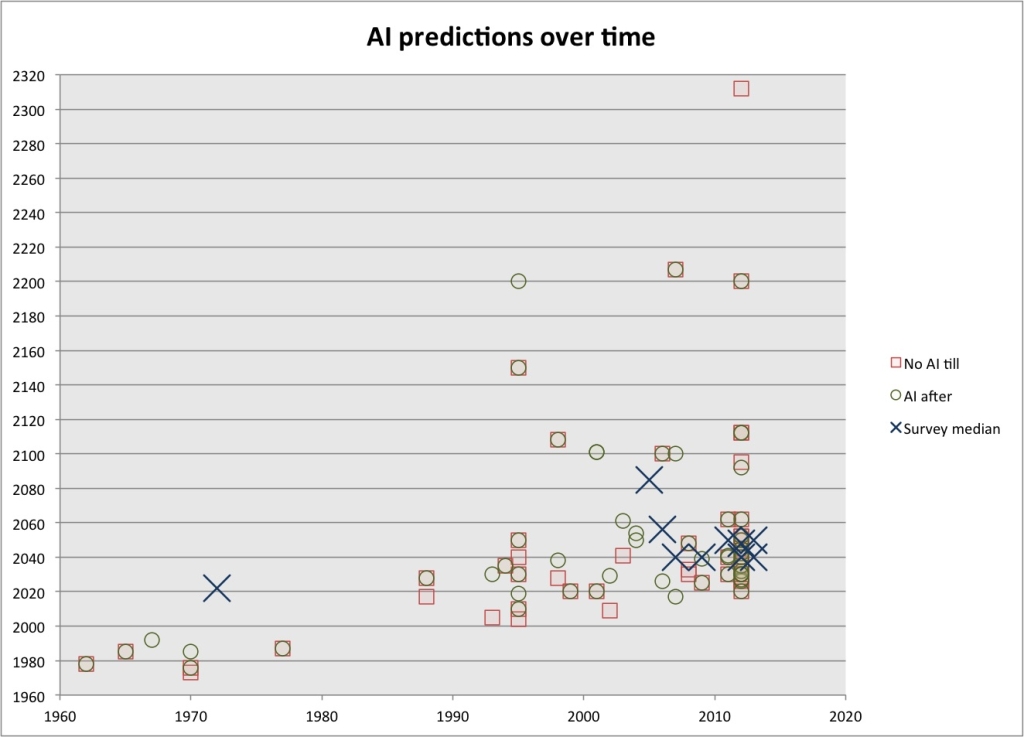

Here’s a picture of most of the predictions, from our summary:

Recent surveys seem to pretty reliably predict AI between 2040 and 2050, as you can see. The earlier surveys which don’t fit this trend also had less uniform questions, whereas the last six surveys ask about the year in which there is a 50% chance that (something like) human-level AI will exist. The entire set of individual predictions has a median somewhere in the 2030s, depending on how you count. However for predictions made since 2000, the median is 2042 (minPY), in line with the surveys. The surveys that ask also consistently get median dates for a 10% chance of AI in the 2020s.

This consistency seems interesting, and these dates seem fairly soon. If we took these estimates seriously, and people really meant at least ‘AI that could replace most humans in their jobs’, the predictions of ordinary AI researchers seem pretty concerning. 2040 is not far off, and the 2020s seem too close for us to be prepared to deal with moderate chances of AI, at the current pace.

We are not sure what to make of these predictions. Predictions about AI are frequently distrusted, though often alongside complaints that seem weak to us: for instance, that people are biased to predict AI twenty years in the future, or just before their own deaths; that AI researchers have always been very optimistic and continually proven wrong; that experts and novices make the same predictions (Edit (6/28/2016): now found to be based on an error); or that failed predictions of the past look like current predictions. There really do seem to be selection biases, for instance from people who are optimistic about AGI being more likely to work in the field, and from predictions of shorter timelines being published more often. However, there are ways to avoid these.

There do, however, seem to be a few good reasons to distrust these predictions. First, it’s not clear that people can predict these kinds of events well in any field, at least without the help of tools. Second, and relatedly, it’s not clear what tools and other resources people used in the creation of these predictions. Did they model the situation carefully, or just report their gut reactions? My guess is near the ‘gut reaction’ end of the spectrum, based on looking for reasoning and finding only a little. Often gut reactions are reliable, but I don’t expect them to be so, on their own, in an area such as forecasting novel and revolutionary technologies.

Thirdly, phrases like, ‘human-level AI arrives’ appear to stand for different events for different people. Sometimes people are talking about almost perfect human replicas, sometimes software entities that can undercut a human at work without resembling them much at all, sometimes human-like thinking styles which are far from being able to replace us. Sometimes they are talking about human-level abilities at human cost, sometimes at any cost. Sometimes consciousness is required, sometimes poetry is, sometimes calculating ability suffices. Our impressions from talking to people are that ‘AI predictions’ mean a wide variety of things. So the collection of predictions is probably about different events, which we might reasonably expect to happen at fairly different times. Before trusting experts here, it seems key to check we know what they are talking about.

Given all of these things, I don’t trust these predictions a huge amount. However, I expect they are somewhat informative, and there are not a lot of good sources to trust at present.

The next things I’d like to know in this area:

- What do experts actually believe about human-level AI timelines, if you check fairly thoroughly that they are talking about what you think they are talking about, and aren’t making obviously different assumptions about other matters?

- How reliable are similar predictions? For instance, predictions of novel technologies, predictions of economic upheaval, predictions of disaster?

- Why do the results of the Hanson survey conflict with the other surveys?

- How do people make the predictions they make? (e.g. How often are they thinking of hardware trends? Using intuition? Following the consensus of others?)

- Why are AGI researchers so much more optimistic than AI researchers, and are AI researchers so much more optimistic than others?

- What disagreements between AI researchers produce their different predictions?

- What do AI researchers know that informs their predictions that people outside the field (like me) do not know? (What do they know that doesn’t inform their predictions, but should?)

Hopefully we’ll be looking more into some of these things soon.

The overall problem with any futurist “prediction” is that its syllogism needs at least one premise which is itself an assumption about future events, and which therefore cannot currently be confirmed. Often it also needs many more unconfirmed premises about complex adaptive systems, such as our ecological and economic environment.

Hence, futurists can only describe scenarios – which only occur if all assumptions turn out to be right. Serious futurology should disclose its assumptions.
