By Katja Grace, 7 February 2015

Often, when people are asked ‘when will human-level AI arrive?’ they suggest that it is a meaningless or misleading term. I think they have a point. Or several, though probably not as many as they think they have.

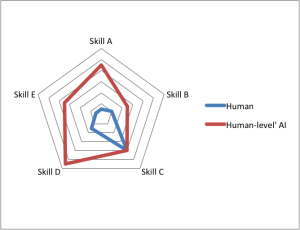

One problem is that if the skills of an AI are developing independently at different rates, then by the time an AI has the full kit of human skills, it will also have a bunch of skills that are way past human-level. For instance, if a ‘human-level’ AI were developed now, it would be much better than human-level at arithmetic.

Thus the term ‘human-level’ is misleading because it invites an image of an AI which competes on even footing with humans, rather than one that is at least as skilled as a human in every way, and thus what we would usually think of as extremely superhuman.

Another problem is that the term is used to mean multiple things, which then get confused with each other. One such thing is a machine which replicates human cognitive behavior, at any cost. Another is a machine which replicates human cognitive behavior at the price of a human. The former could plausibly be built years before the latter, and should arguably not be nearly as economically exciting. Yet often people imagine the two events coinciding, seemingly for lack of conceptual distinction.

Should we use different terms for human-level at human cost, and human-level at any cost? Should we have a different term altogether, which evokes at-least-human capabilities? I’ll leave these questions to you. For now, we have just made a disambiguation page.
