Articles by Katja Grace

AI Timelines

Blog

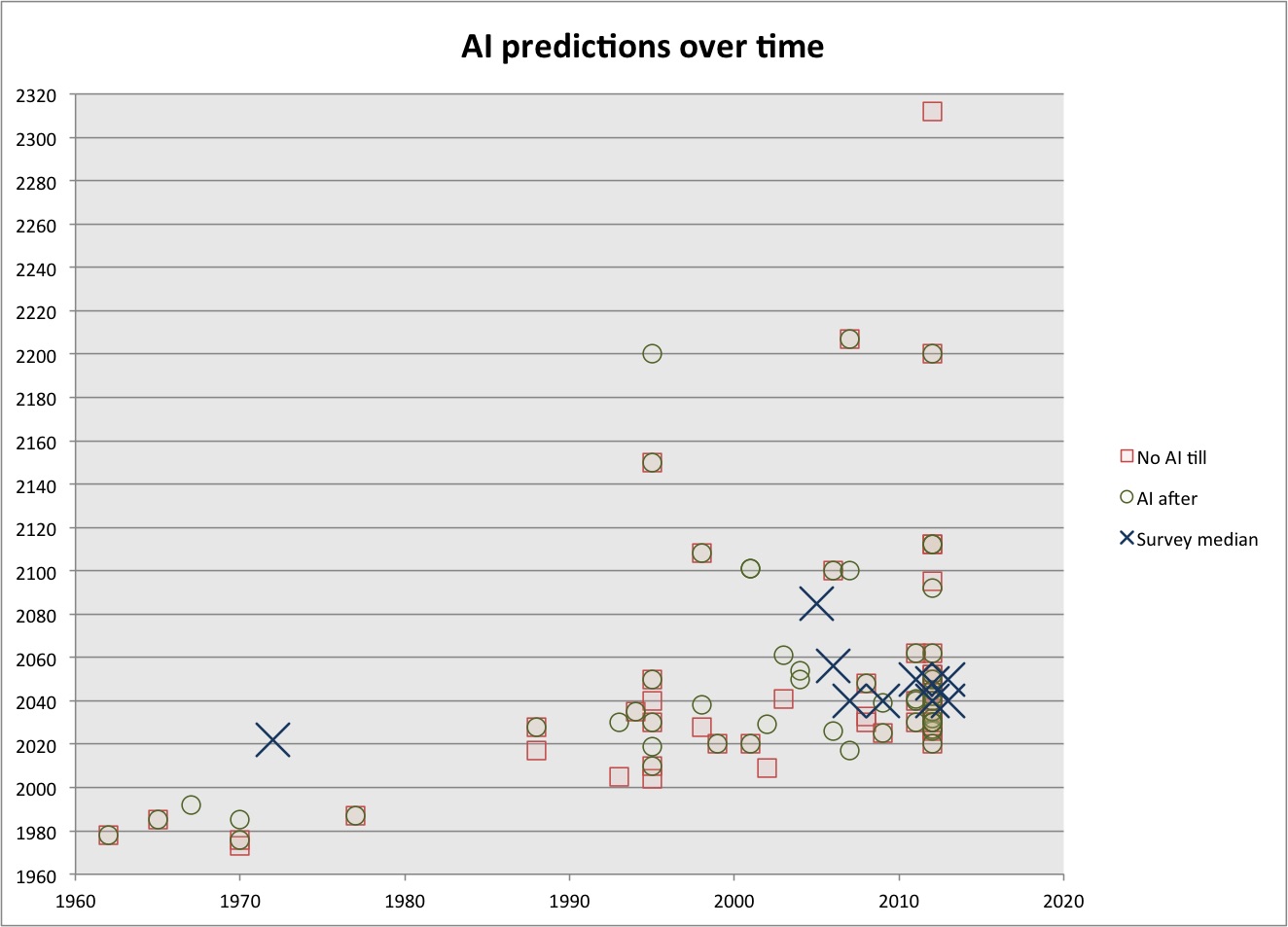

Accuracy of AI Predictions