Updated 9 November 2020

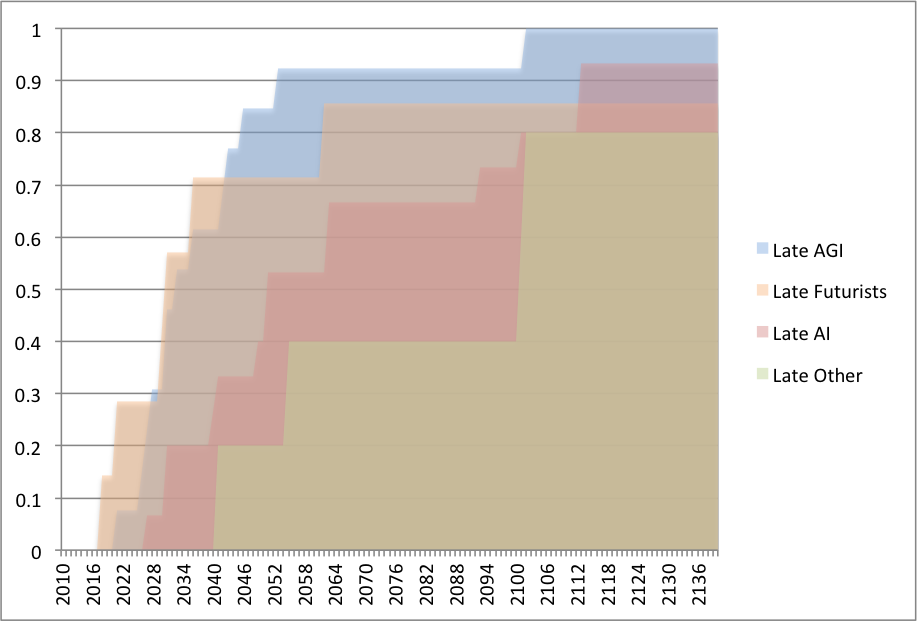

In 2015, AGI researchers appeared to expect human-level AI substantially sooner than other AI researchers. The difference ranges from about five years to at least about sixty years as we move from the highest percentiles of optimism to the lowest. Futurists appear to be around as optimistic as AGI researchers. Other people appear to be substantially more pessimistic than AI researchers.

Details

MIRI dataset

We categorized predictors in the MIRI dataset as AI researchers, AGI (artificial general intelligence) researchers, Futurists and Other. We also translated their statements into a common format, roughly corresponding to the first year in which the person appeared to be suggesting that human-level AI was more likely than not (see ‘minPY’, described on the MIRI dataset page).

Recent (since 2000) predictions are shown in the figure below. Those made by people working on AGI specifically tended to be decades more optimistic than those at the same percentile of optimism working in other areas of AI. The difference ranged from around five years to at least around sixty years as we move from the soonest predictions to the latest. Those who worked in AI broadly tended to be at least a decade more optimistic than ‘others’, at any percentile of optimism within their group. Futurists were about as optimistic as AGI researchers.

Note that these predictions were made over a period of at least 12 years, rather than at the same time.

Median predictions are shown below. These are also minPY predictions as defined on the MIRI dataset page, calculated from the ‘cumulative distributions’ sheet in the updated dataset spreadsheet, which is also available there.

| Median AI predictions | AGI | AI | Futurist | Other | All |
|---|---|---|---|---|---|
| Early (pre-2000) (warning: noisy) | 1988 | 2031 | 2036 | 2025 | |
| Late (since 2000) | 2033 | 2051 | 2031 | 2101 | 2042 |
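The gaps between group medians can be read straight off the late (since 2000) row of the table above. The short sketch below only does that arithmetic; the dictionary and the `gap` helper are illustrative names, not part of the MIRI dataset or its spreadsheet.

```python
# Late (since 2000) median minPY predictions, copied from the table above.
late_medians = {"AGI": 2033, "AI": 2051, "Futurist": 2031, "Other": 2101}


def gap(later_group, earlier_group, medians=late_medians):
    """Years by which later_group's median trails earlier_group's median."""
    return medians[later_group] - medians[earlier_group]


print("AI vs AGI:", gap("AI", "AGI"))              # 18 years
print("Other vs AI:", gap("Other", "AI"))          # 50 years
print("AGI vs Futurist:", gap("AGI", "Futurist"))  # 2 years
```

These are differences at the median only. The percentile-by-percentile differences described earlier come from the full distributions, which this sketch does not reproduce.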

FHI survey data

The FHI survey results suggest that people’s views do not differ much between those who work in computer science and those who work in other parts of academia. We have not investigated this evidence in more detail.

Implications

Biases from optimistic predictors and information asymmetries: Differences of opinion among groups who predict AI suggest either that some groups have more information, or that the groups’ predictions are biased relative to one another. Bias can arise even among unbiased but noisy forecasters: if only the people most optimistic about a field enter it, then the views of those in the field will be biased toward optimism. Either possibility is valuable to know about, so that we can look into the additional information or try to correct for the biases.
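The selection effect described above can be illustrated with a small simulation. This is a minimal sketch under stated assumptions, not an analysis of the MIRI or FHI data: the "true" year, the noise level, and the rule that the most optimistic 20% of forecasters enter the field are all hypothetical values chosen for illustration.

```python
import random
import statistics


def simulate_selection(true_year=2060, noise_sd=20.0, n=10_000,
                       enter_fraction=0.2, seed=0):
    """Return (mean forecast of everyone, mean forecast of those in the field).

    All parameters are hypothetical. Each forecaster is unbiased but noisy:
    their forecast is the true year plus zero-mean Gaussian noise.
    """
    rng = random.Random(seed)
    forecasts = [rng.gauss(true_year, noise_sd) for _ in range(n)]

    # Assumption: only the most optimistic forecasters (earliest years)
    # choose to enter the field.
    n_entrants = int(n * enter_fraction)
    in_field = sorted(forecasts)[:n_entrants]

    return statistics.mean(forecasts), statistics.mean(in_field)


population_mean, field_mean = simulate_selection()
print(f"Mean forecast, everyone:     {population_mean:.1f}")
print(f"Mean forecast, in the field: {field_mean:.1f}")
```

Although every simulated forecaster is individually unbiased, the group that selects into the field gives an earlier average date than the population as a whole. The size of the gap depends entirely on the assumed noise and selection rule.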
